Multi-byte characters and problem resolution when going from on-prem to OCI

When doing a data pump from on-prem to the cloud you will often hit errors with conversion errors.

Plenty of background information but little on resolving this, so putting this together as a reminder when going forward.

Typical errors when doing datapump -

ORA-02374: conversion error loading table "ABC"."STANDARD_LETTERS"

ORA-12899: value too large for column NAME (actual: 51, maximum: 50)

ORA-02372: data for row: NAME : 0X'4D65746572205374726174656779202D204D6574657220696E'

……..

. . imported "ABC"."STANDARD_LETTERS" 45.80 MB 1143 out of 1146 rows

…….

ORA-02374: conversion error loading table "ABC"."AFFECTED_ADDRESSES"

ORA-12899: value too large for column OCCUPIER_NAME (actual: 51, maximum: 50)

ORA-02372: data for row: OCCUPIER_NAME : 0X'4D52205354414E49534C41555320434841464553554B41202D'

…..

. . imported "ABC"."AFFECTED_ADDRESSES" 94.30 MB 10799942 out of 10799944 rows

......

One approach would be “data cleanse” the offending tables but this means having to search for multi-byte characters and often this can mean millions of rows.

Also, there can be a reluctance to change any source data (and can be problematic identifying those characters).

To avoid this, we have been changing the table data type and re-importing the data.

Using the above errors as examples -

For test db from source:

SQL> desc ABC.AFFECTED_ADDRESSES

Name Null? Type

----------------------------------------- -------- ----------------------------

..

OCCUPIER_NAME VARCHAR2(50)

..

You need to include the where clause for each of the offending rows –

This generates -

SET DEFINE OFF;

Insert into STANDARD_LETTERS

(STAN_ID, NAME, SHORT_NAME, FILENAME, EMAIL,

STAN_BODY, VALID_DATE, CURRENT_VALID, PREVIEW, DOC_SOURCE,

STAN_COVLET_STAN_ID)

Values

(100, 'Strategy - installaltion > £600 letter', 'MS8', 'MS8.doc', 'N',

'', TO_DATE('02/04/2009 12:06:33', 'DD/MM/YYYY HH24:MI:SS'), 'Y', NULL, NULL,

NULL);

COMMIT;

However :

SQL> Insert into STANDARD_LETTERS;

(STAN_ID, NAME, SHORT_NAME, FILENAME, EMAIL,

STAN_BODY, VALID_DATE, CURRENT_VALID, PREVIEW, DOC_SOURCE,

STAN_COVLET_STAN_ID)

Values

(100, 'Strategy - installaltion > £600 letter', 'MS8', 'MS8.doc', 'N',

'', TO_DATE('02/04/2009 12:06:33', 'DD/MM/YYYY HH24:MI:SS'), 'Y', NULL, NULL,

NULL);

(1001077, 'Strategy - installaltion > £600 letter', 'MS8', 'MS8.doc', 'N',

*

Also, there can be a reluctance to change any source data (and can be problematic identifying those characters).

To avoid this, we have been changing the table data type and re-importing the data.

Using the above errors as examples -

For test db from source:

SQL> desc ABC.AFFECTED_ADDRESSES

Name Null? Type

----------------------------------------- -------- ----------------------------

..

OCCUPIER_NAME VARCHAR2(50)

..

For the test db in OCI (we change to 50 char to allow for multi-byte characters) :

SQL>alter table ABC.AFFECTED_ADDRESSES modify OCCUPIER_NAME varchar2 (50 char);

SQL> desc ABC.AFFECTED_ADDRESSES

Name Null? Type

----------------------------------------- -------- ----------------------------

..

OCCUPIER_NAME VARCHAR2(50 CHAR)

..

Now on running the import for the table –

. . imported "ABC"."AFFECTED_ADDRESSES" 94.30 MB 10799944 rows

Remember table_exists_action=truncate in the parameter file to “clear” the table prior to importing the data.

While this approach works, if you have 1 row fail for a few million rows this is not ideal having to run an import again.

To get around this and to avoid having to change the column datatype, an option is to generate the missing rows from the source database.

From the datapump log we can see the offending row -

ORA-02374: conversion error loading table "ABC"."STANDARD_LETTERS"

ORA-12899: value too large for column NAME (actual: 51, maximum: 50)

ORA-02372: data for row: NAME : 0X'4D65746572205374726174656779202D204D6574657220696E'

Use script to identify row data (run for all the offending rows)….

SQL> select name FROM ABC.STANDARD_LETTERS WHERE NAME like '%' || utl_raw.cast_to_varchar2 ('4D65746572205374726174656779202D204D6574657220696E') || '%'

Strategy - installaltion £600 letter …etc

However, we still need to generate the insert statement to insert the problem row to the table.

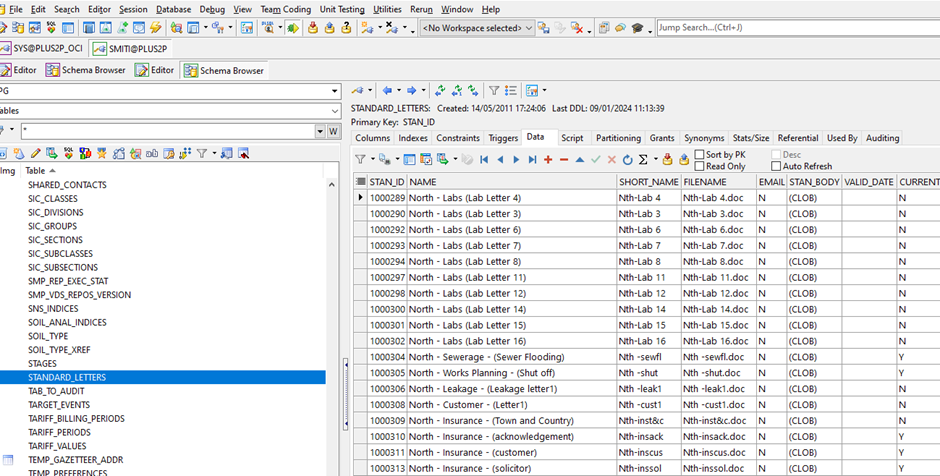

The easy option is to use Toad and select the table from the schema browser

From the export dataset button use the drop-down option and select “create insert statement”

SQL>alter table ABC.AFFECTED_ADDRESSES modify OCCUPIER_NAME varchar2 (50 char);

SQL> desc ABC.AFFECTED_ADDRESSES

Name Null? Type

----------------------------------------- -------- ----------------------------

..

OCCUPIER_NAME VARCHAR2(50 CHAR)

..

Now on running the import for the table –

. . imported "ABC"."AFFECTED_ADDRESSES" 94.30 MB 10799944 rows

Remember table_exists_action=truncate in the parameter file to “clear” the table prior to importing the data.

While this approach works, if you have 1 row fail for a few million rows this is not ideal having to run an import again.

To get around this and to avoid having to change the column datatype, an option is to generate the missing rows from the source database.

From the datapump log we can see the offending row -

ORA-02374: conversion error loading table "ABC"."STANDARD_LETTERS"

ORA-12899: value too large for column NAME (actual: 51, maximum: 50)

ORA-02372: data for row: NAME : 0X'4D65746572205374726174656779202D204D6574657220696E'

Use script to identify row data (run for all the offending rows)….

SQL> select name FROM ABC.STANDARD_LETTERS WHERE NAME like '%' || utl_raw.cast_to_varchar2 ('4D65746572205374726174656779202D204D6574657220696E') || '%'

Strategy - installaltion £600 letter …etc

However, we still need to generate the insert statement to insert the problem row to the table.

The easy option is to use Toad and select the table from the schema browser

From the export dataset button use the drop-down option and select “create insert statement”

You need to include the where clause for each of the offending rows –

This generates -

SET DEFINE OFF;

Insert into STANDARD_LETTERS

(STAN_ID, NAME, SHORT_NAME, FILENAME, EMAIL,

STAN_BODY, VALID_DATE, CURRENT_VALID, PREVIEW, DOC_SOURCE,

STAN_COVLET_STAN_ID)

Values

(100, 'Strategy - installaltion > £600 letter', 'MS8', 'MS8.doc', 'N',

'', TO_DATE('02/04/2009 12:06:33', 'DD/MM/YYYY HH24:MI:SS'), 'Y', NULL, NULL,

NULL);

COMMIT;

However :

SQL> Insert into STANDARD_LETTERS;

(STAN_ID, NAME, SHORT_NAME, FILENAME, EMAIL,

STAN_BODY, VALID_DATE, CURRENT_VALID, PREVIEW, DOC_SOURCE,

STAN_COVLET_STAN_ID)

Values

(100, 'Strategy - installaltion > £600 letter', 'MS8', 'MS8.doc', 'N',

'', TO_DATE('02/04/2009 12:06:33', 'DD/MM/YYYY HH24:MI:SS'), 'Y', NULL, NULL,

NULL);

(1001077, 'Strategy - installaltion > £600 letter', 'MS8', 'MS8.doc', 'N',

*

ERROR at line 6:

ORA-12899: value too large for column "ABC"."STANDARD_LETTERS"."NAME" (actual:

51, maximum: 50)

Which is the error we are seeing in datapump – not surprising.

Removing the offending “£” (tempted to correct the spelling of installation!)

We could have changed the datatype on the column to insert the pound but left “as is” to demonstrate the process.

SQL> Insert into STANDARD_LETTERS

(STAN_ID, NAME, SHORT_NAME, FILENAME, EMAIL,

STAN_BODY, VALID_DATE, CURRENT_VALID, PREVIEW, DOC_SOURCE,

STAN_COVLET_STAN_ID)

Values

(100, 'Strategy - installaltion > 600 letter', 'MS8', 'MS8.doc', 'N',

'', TO_DATE('02/04/2009 12:06:33', 'DD/MM/YYYY HH24:MI:SS'), 'Y', NULL, NULL,

NULL);

1 row created.

After running the above for all the offending rows - on checking the row counts (as expected).

SQL> select count(*) from ABC.AFFECTED_ADDRESSES;

COUNT(*)

----------

10799944

SQL> select count(*) from ABC.STANDARD_LETTERS;

COUNT(*)

----------

1146

Ensure to test on the test environment prior to updating any prod database,

This is quite a simple example and worth further reading - any comments please let me know.

ORA-12899: value too large for column "ABC"."STANDARD_LETTERS"."NAME" (actual:

51, maximum: 50)

Which is the error we are seeing in datapump – not surprising.

Removing the offending “£” (tempted to correct the spelling of installation!)

We could have changed the datatype on the column to insert the pound but left “as is” to demonstrate the process.

SQL> Insert into STANDARD_LETTERS

(STAN_ID, NAME, SHORT_NAME, FILENAME, EMAIL,

STAN_BODY, VALID_DATE, CURRENT_VALID, PREVIEW, DOC_SOURCE,

STAN_COVLET_STAN_ID)

Values

(100, 'Strategy - installaltion > 600 letter', 'MS8', 'MS8.doc', 'N',

'', TO_DATE('02/04/2009 12:06:33', 'DD/MM/YYYY HH24:MI:SS'), 'Y', NULL, NULL,

NULL);

1 row created.

After running the above for all the offending rows - on checking the row counts (as expected).

SQL> select count(*) from ABC.AFFECTED_ADDRESSES;

COUNT(*)

----------

10799944

SQL> select count(*) from ABC.STANDARD_LETTERS;

COUNT(*)

----------

1146

Ensure to test on the test environment prior to updating any prod database,

This is quite a simple example and worth further reading - any comments please let me know.

I may update this with a scripted solution and provide further detail going forward.